2. What is Fairness?

Throughout the course, we will take a practical view of fairness in algorithmic decision making: how can we identify potential issues with a system, understand its impact on the people it interacts with, and decide what to do about them?

This lecture will introduce terms and concepts from philosophy that will help us approach these questions. We will briefly discuss terms like fairness, justice, welfare, equality, equity, bias, and discrimination, and how they frame society’s discussions about decision making.

2.1. Example: Medical decision making

As an introductory example, we’ll examine the issues involved in rationing medical care, specifically ventilators, during the COVID-19 pandemic [SCW20].

People with severe cases of COVID-19 have trouble breathing, and medical treatment often consisted of putting the patient on a ventilator for weeks at a time. At the height of the pandemic, many hospitals filled beyond capacity and experienced a shortage of ventilators. The question became: which patients should receive a ventilator?

Should only those with the highest chance at recovery receive a ventilator?

This option attempts to make the best use of the existing (scarce) stock of ventilators and reflects a utilitarian point of view. However, there are a few objections to it:

What if ventilators are sitting unused in anticipation of future patients with a higher chance of recovery?

How is “chance of recovery” measured? Those with a better chance of recovery may be in better health only because of prior access to quality healthcare.

Other options might suggest that patients have, in some sense, an equal right to the needed ventilators:

Should patients entering the hospital receive ventilators on a ‘first-come first-served’ basis?

Should anyone in need, regardless of prior access to healthcare, have an equal chance of receiving a ventilator?

In practice, healthcare systems blend these two points of view [SCW20]. We will examine these concepts and continue analyzing this example throughout this lecture.

2.2. Notions of fairness, welfare, and justice

Initially, we will focus on concepts of fairness, welfare, and justice in the context of allocative decision making by a state or organization. This entry point is reasonable, as

algorithmic decision making systems usually make decisions for others, and

discussions of allocative decisions are plentiful, accessible, and important.

At the end of this lecture, we’ll explore how these notions apply in other settings.

2.3. Utilitarianism

First developed by Jeremy Bentham and John Stuart Mill, utilitarianism is a doctrine that states:

Only the consequences of actions determine what’s right and wrong (consequentialism).

The right action is one that ‘provides the greatest benefit to the most people.’ (utilitarianism)

The perspective of maximizing aggregate social welfare has a notable consequence: a person may be treated poorly for the good of others. The famous trolley problem is a thought experiment illustrating this consequence:

A trolley is heading toward five people working on the track. You can pull a lever and redirect the trolley to a track that has only one worker. Would you pull the lever, deciding to kill one worker to save the other five?

A utilitarian might count the cost of not pulling the lever (five deaths) as five times greater than the cost of pulling it (one death).

Question

What are the reasons you wouldn’t pull the lever?

Which of these reasons are objections to the consequentialist component? (part 1)

Which of these reasons are objections to the utilitarian component? (part 2)

Are there reasons for objecting to pulling the lever that are consistent with utilitarianism? (e.g. how the total utility is calculated).

The ventilator example illustrates a similar observation:

Would you hold back a ventilator for a patient who might arrive later and who is more likely to recover because of it (or to recover more quickly)?

Would you take a ventilator away from a patient already using it if another patient, who might benefit more from it, needs one as well?

Even for the committed utilitarian, the answers to these questions depend on how you define ‘benefit’ and calculate the utility of a given decision. The study by Savulescu et al. examines some of these choices, including maximizing the number of lives saved and maximizing the aggregate number of life-years saved (which values the healthy over the frail and the young over the old).
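To make this concrete, here is a minimal Python sketch with three hypothetical patients; the survival probabilities and remaining life-years are invented for illustration. It assumes that every patient dies without a ventilator, so the benefit of ventilating a patient is their survival probability, optionally weighted by remaining life-years. The same utilitarian allocation rule selects different patients depending on which notion of benefit is plugged in.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    name: str
    p_survive: float   # hypothetical probability of survival WITH a ventilator
    life_years: float  # hypothetical remaining life-years if the patient survives


# Assumption: every patient dies without a ventilator, so the benefit of
# ventilating a patient is their (possibly weighted) chance of survival.
def lives_saved(p: Patient) -> float:
    return p.p_survive


def life_years_saved(p: Patient) -> float:
    return p.p_survive * p.life_years


def allocate(patients, n_ventilators, utility):
    # Utilitarian rule: benefits add up independently across patients, so giving
    # the ventilators to the highest-utility patients maximizes the total.
    ranked = sorted(patients, key=utility, reverse=True)
    return [p.name for p in ranked[:n_ventilators]]


# Hypothetical patients, invented for illustration only.
patients = [
    Patient("A", p_survive=0.9, life_years=10),
    Patient("B", p_survive=0.7, life_years=50),
    Patient("C", p_survive=0.8, life_years=30),
]

print(allocate(patients, 2, lives_saved))       # ['A', 'C']
print(allocate(patients, 2, life_years_saved))  # ['B', 'C']
```

Neither utility function is endorsed here; the point is that the definition of benefit, not the optimization step, determines who receives a ventilator.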

Public health professionals working in healthcare systems make these estimates daily, in a multitude of ways. Of course, these professionals also take into account legal, professional, and moral reasons in making their choices of benefit and utility. We will address these later.

2.3.1. Utilitarianism and machine learning

This theory aligns with the basic organization of a decision-making system powered by machine learning. Fitting a model optimizes a loss function over the training set. The features are used to approximate potential benefits (often captured via a label), while the loss function plays the role of (negative) utility. By default, a model is considered better when it achieves lower loss aggregated over the entire training set, regardless of how that loss is distributed among individuals.
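The utilitarian character of empirical risk minimization can be seen in a small Python sketch. It chooses the threshold of a one-feature classifier (predict 1 when the score is at least the threshold) that minimizes the average 0-1 loss over a pooled data set. The data are hypothetical: a smaller group has a different relationship between score and label than a larger one. The threshold that is best in aggregate makes no errors on the larger group and misclassifies half of the smaller one.

```python
# Hypothetical data: (score, true label) pairs. In the majority group the label
# is 1 when score >= 0.5; in the minority group it is 1 when score >= 0.3.
majority = [(s, 0) for s in (0.1, 0.15, 0.2, 0.3, 0.4)] + [
    (s, 1) for s in (0.5, 0.6, 0.7, 0.8, 0.9)
]
majority = majority * 2  # the majority group is five times larger (20 vs 4 points)
minority = [(0.1, 0), (0.2, 0), (0.3, 1), (0.4, 1)]


def error_rate(data, t):
    # Average 0-1 loss of the rule "predict 1 iff score >= t".
    wrong = sum((score >= t) != bool(label) for score, label in data)
    return wrong / len(data)


pooled = majority + minority
candidates = sorted({score for score, _ in pooled})

# Empirical risk minimization: choose the threshold with the lowest average loss
# over everyone, without regard to how errors are distributed across groups.
best_t = min(candidates, key=lambda t: error_rate(pooled, t))

print(best_t)                                                      # 0.5
print(error_rate(majority, best_t), error_rate(minority, best_t))  # 0.0 0.5
```

A different aggregate, for example one that weights the two groups equally, could select a different threshold (0.3 on this data). Which aggregate to optimize is a moral choice as much as a technical one.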

Question

Sketch the structure of a model that decides whether a new patient should receive a ventilator. Begin with the following components:

A model that, given information about the patient (place of residence, age, medical history), predicts the number of days they would use a ventilator.

The current state of the healthcare system (number of patients arriving per day, with given medical information).

A utility function to optimize (think about what this might be).

What are some morally problematic behaviors of this model? What do you think it should or should not use in making its decisions?

2.4. Distributive Justice

A theory of distributive justice describes ‘a just distribution of relevant goods in a society.’ The qualifier ‘just’ responds to utilitarianism’s willingness to distribute goods unjustly in the name of maximizing the aggregate welfare of the people. What qualifiers and limitations should be placed on such distributions to make them morally acceptable?

Three closely related limits that address just distribution are equality, priority, and sufficiency [Val03]. Theories of egalitarianism limit the welfare-maximizing distribution by a condition ensuring that, in a broad sense, people benefit equally. Prioritarianism directs goods first to those most in need. Sufficiency places a floor on the distribution of goods so that everyone’s basic needs are met; beyond that floor, goods are distributed to maximize welfare.
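One way to see how these limits differ is to score a few candidate distributions with one criterion per view. The Python sketch below is a toy under loudly stated assumptions: welfare is a single number per person, the candidate distributions are invented, equality is measured by the gap between the best-off and worst-off person, priority is modeled by summing square roots of welfare (a concave transform), and the sufficiency floor of 2 is arbitrary. Under these choices, each criterion picks a different distribution.

```python
import math

# Hypothetical welfare levels of three people under four candidate distributions.
candidates = {
    "A": [12, 9, 1],
    "B": [6, 6, 6],
    "C": [8, 7, 5],
    "D": [11, 8, 2],
}


def utilitarian(w):
    # Only total welfare matters.
    return sum(w)


def egalitarian(w):
    # One simple equality measure (an assumption): a smaller gap between the
    # best-off and worst-off person is better.
    return -(max(w) - min(w))


def prioritarian(w):
    # A concave transform (square root, an assumption) makes a unit of welfare
    # count for more when it goes to someone worse off.
    return sum(math.sqrt(x) for x in w)


def sufficientarian(w, floor=2):
    # Rule out distributions that leave anyone below the (arbitrary) floor;
    # above the floor, maximize total welfare.
    return sum(w) if min(w) >= floor else -math.inf


def choose(criterion):
    # Pick the candidate distribution that scores highest under the criterion.
    return max(candidates, key=lambda name: criterion(candidates[name]))


for rule in (utilitarian, egalitarian, prioritarian, sufficientarian):
    print(rule.__name__, choose(rule))
# utilitarian A
# egalitarian B
# prioritarian C
# sufficientarian D
```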

Note

The term ‘goods’ here is broadly defined as anything that positively contributes to a person’s welfare (tangible goods, happiness, opportunity).

In its most naive form, an egalitarian theory might require goods to be distributed equally to everyone. However, given the broad notion of ‘goods’, any such theory would be unreasonably restrictive. Moreover, even if one requires only that each person’s total utility be equal, one has to confront the effect that drastically different life choices have on that total. One way to approach this issue is to stipulate that everyone has a right to the same opportunities in life.

The remainder of this section follows [Arn15].

2.4.1. Formal Equality of Opportunity

Equality of Opportunity (EO) is a condition that seeks to restrict the distribution of goods in a fair way. There are several notions of EO that refine the condition of ‘equal’ in ways that lead to different results in practice. Various notions of EO are codified in law in countries around the world.

Formal equality of opportunity requires that a benefit be theoretically available to anyone, regardless of their background. For example, a job opening that satisfies formal EO requires the employer to open the pool of applicants to everyone and to consider those applications on their merits (as opposed to, for example, through nepotism).

In the example of the distribution of ventilators, formal equality of opportunity is not violated. Indeed, all patients who enter the hospital are considered for treatment with a ventilator; the choice of who receives one is based purely on ‘merit’ (conditions that are applied equally to all individuals). Ventilators are not, for example, reserved for donors to the hospital.

2.4.2. Fair Equality of Opportunity

One limitation of formal equality of opportunity is that, while the distribution of goods is open to all, the ability to take advantage of such an opportunity may effectively be nonexistent. For example, a job may require passing an arbitrary examination that only the very wealthy pass (because only they can afford the training). While this job is theoretically available to everyone (satisfying formal EO), in practice it is available only to the very wealthy.

John Rawls developed the concept of “fair equality of opportunity” (FEO), which modifies the EO statement so that, “assuming there is a distribution of natural assets, those who are at the same level of talent and ability, and have the same willingness to use them, should have the same prospects of success regardless of their initial place in the social system” [Arn15].

To illustrate this ideal, Rawls devised a thought experiment he called ‘The Veil of Ignorance’: imagine that you are proposing a certain distribution of goods in a hypothetical society. Would you consider the distribution just if you did not know who you would be in this society?

The Veil of Ignorance separates (dis)advantages that stem from circumstance, such as the family you are born into, from those that result from conscious choices made throughout life.

In the example of ventilator distribution, a patient born with a disability should have the same claim to a ventilator as someone without a disability, even if that disability complicates recovery.

In [HLG+19], the authors translate FEO into mathematical terms as follows. Suppose

\(e\) refers to the choices that one puts into life (talent, effort, ambition). These are, according to FEO, the legitimate sources of inequality that arise in the world.

\(c\) refers to circumstances beyond one’s control. \(c\) captures the conditions from birth that may affect life’s outcomes (e.g. socio-economic status at birth).

\(F(\cdot|c, e)\) is the cumulative distribution of utility at fixed effort \(e\) and circumstance \(c\).

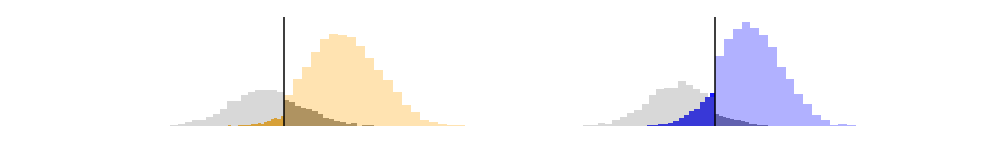

The Rawlsian FEO states that for all circumstances \(c, c'\) and a fixed \(e\):

\[F(\cdot|c, e) = F(\cdot|c', e).\]

That is, the distribution of utility depends on effort \(e\) but not on circumstance \(c\).
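The Rawlsian FEO condition can be checked on a sample by comparing, at each effort level, the empirical distributions of utility across circumstances. The Python sketch below uses invented (circumstance, effort, utility) records with a discrete effort variable and reports, for each effort level, the largest gap between empirical CDFs over all pairs of circumstances (the two-sample Kolmogorov-Smirnov statistic). A gap of 0 means the condition holds exactly on the sample.

```python
from collections import defaultdict
from itertools import combinations


def ecdf(values):
    # Empirical CDF: F(u) = fraction of the values that are <= u.
    n = len(values)
    return lambda u: sum(x <= u for x in values) / n


def max_cdf_gap(a, b):
    # Largest vertical distance between the empirical CDFs of samples a and b
    # (the two-sample Kolmogorov-Smirnov statistic), checked at every observed value.
    fa, fb = ecdf(a), ecdf(b)
    return max(abs(fa(u) - fb(u)) for u in set(a) | set(b))


def fair_eo_gaps(records):
    # records: (circumstance c, effort e, utility) triples, with discrete effort.
    # Returns, for each effort level, the worst-case CDF gap over pairs of circumstances.
    by_effort = defaultdict(lambda: defaultdict(list))
    for c, e, u in records:
        by_effort[e][c].append(u)
    return {
        e: max(
            (max_cdf_gap(a, b) for a, b in combinations(groups.values(), 2)),
            default=0.0,
        )
        for e, groups in by_effort.items()
    }


# Hypothetical records: at high effort, utility does not depend on circumstance;
# at low effort, the "poor" circumstance leads to lower utility.
records = [
    ("rich", "high", 9), ("rich", "high", 8),
    ("poor", "high", 9), ("poor", "high", 8),
    ("rich", "low", 5), ("rich", "low", 6),
    ("poor", "low", 2), ("poor", "low", 3),
]

print(fair_eo_gaps(records))  # {'high': 0.0, 'low': 1.0}
```

With real, finite samples one would test whether a gap is statistically distinguishable from zero rather than demand exact equality, and a continuous effort variable would need to be binned first.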

2.4.3. Luck Egalitarianism

Conceptions of Equality of Opportunity dictate conditions under which it is just to gain an advantage over others in society. On the other hand, Luck Egalitarianism demands that differences in the goods that people accumulate should only be determined by choices people make and not by differences in unchosen circumstance throughout their life (i.e. luck).

In the example of ventilator distribution, a patient systematically denied access to the healthcare system (e.g. by being born into poverty) may have a greater claim to a ventilator (in spite of being in poorer health), so that their expected benefit equals that of patients with easy access to healthcare (who are more likely to be healthy, to arrive at the hospital less sick, etc.). FEO does not grant such a claim.

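Luck egalitarianism is also called ‘The Level Playing Field’ ideal because of its relative view of legitimate sources of inequality: effort is measured relative to others who share the same circumstances. That is, two people who exert the same relative effort (the same effort quantile within their own circumstances) should have the same prospects of utility, even if their circumstances differ.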

Quantitatively, as derived in [HLG+19], suppose

\(c\) refers to circumstances beyond one’s control (i.e. ‘luck’),

\(\pi\), with \(0 \leq \pi \leq 1\), is an individual’s effort quantile: their rank in the distribution of effort among individuals with circumstances \(c\),

\(F(\cdot|c, \pi)\) is the cumulative distribution of utility at circumstance \(c\) and effort quantile \(\pi\).

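Luck Egalitarianism is satisfied if for all \(\pi\in[0,1]\) and for any two circumstances \(c,c'\):

\[F(\cdot|c, \pi) = F(\cdot|c', \pi).\]

That is, individuals at the same effort quantile face the same distribution of utility, regardless of their circumstances.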
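The key difference from the Rawlsian condition is that effort is compared by its quantile within each circumstance rather than by its absolute level. The Python sketch below illustrates this with invented data: it assigns each person the empirical-CDF value of their effort within their own circumstance, then checks whether people at the same quantile receive the same utilities across circumstances. For simplicity it assumes equal group sizes, so the quantiles line up exactly; real data would require binning quantiles.

```python
from collections import defaultdict


def effort_quantiles(efforts):
    # Empirical CDF of effort within ONE circumstance: the fraction of people
    # in the same circumstance whose effort is at most this person's.
    n = len(efforts)
    return [sum(x <= e for x in efforts) / n for e in efforts]


def utility_by_quantile(data):
    # data: {circumstance: [(effort, utility), ...]}
    # Returns {effort quantile: {circumstance: [utilities]}}.
    table = defaultdict(dict)
    for c, rows in data.items():
        quantiles = effort_quantiles([effort for effort, _ in rows])
        for q, (_, u) in zip(quantiles, rows):
            table[q].setdefault(c, []).append(u)
    return table


def luck_egalitarian_violations(data):
    # Effort quantiles at which the utilities differ across circumstances.
    return sorted(
        q
        for q, groups in utility_by_quantile(data).items()
        if len({tuple(sorted(us)) for us in groups.values()}) > 1
    )


# Hypothetical data: the advantaged group exerts more absolute effort,
# but utility tracks effort RANK within each group.
level = {
    "advantaged": [(4, 5), (6, 6), (8, 8), (10, 9)],
    "disadvantaged": [(1, 5), (2, 6), (3, 8), (4, 9)],
}
# Same efforts, but the lower-effort half of the disadvantaged group fares worse.
tilted = {
    "advantaged": [(4, 5), (6, 6), (8, 8), (10, 9)],
    "disadvantaged": [(1, 3), (2, 4), (3, 8), (4, 9)],
}

print(luck_egalitarian_violations(level))   # []
print(luck_egalitarian_violations(tilted))  # [0.25, 0.5]
```

In `level`, a person with absolute effort 4 receives utility 5 in one circumstance and 9 in the other, so a comparison at fixed absolute effort would flag a difference, while the comparison at fixed effort quantile does not.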