Fairness across the ML Pipeline

Topics

- Approaching fairness across the ML Pipeline

Reading (Required)

This paper approaches pre-processing a dataset via ‘label-flipping’ and ‘re-weighting’, in order to impose demographic parity:

This article discusses how even a fair algorithm may result in unfair circumstances (an intro to next week’s topic):
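As a rough illustration of the ‘re-weighting’ idea named in the first required reading, the sketch below uses one common formulation: each row gets the weight P(group) · P(label) / P(group, label), so that the weighted data shows the same positive rate in every group (demographic parity). This is an assumption about the general technique, not necessarily the exact scheme in the paper or in lecture; the function names, the column names `group` and `label`, and the toy data are all hypothetical.

```python
import pandas as pd


def reweigh(df, group_col, label_col):
    """Return one weight per row: P(group) * P(label) / P(group, label).

    Under these weights the label is statistically independent of the group.
    Assumes no missing values in either column.
    """
    n = len(df)
    # Marginal probability of each row's group and of each row's label.
    p_group = df[group_col].map(df[group_col].value_counts() / n)
    p_label = df[label_col].map(df[label_col].value_counts() / n)
    # Joint probability of each row's (group, label) combination.
    p_joint = df.groupby([group_col, label_col])[label_col].transform("count") / n
    return p_group * p_label / p_joint


def weighted_positive_rate(df, weights, group_col, label_col):
    """Weighted share of positive (label == 1) rows within each group."""
    positive_mass = (weights * df[label_col]).groupby(df[group_col]).sum()
    total_mass = weights.groupby(df[group_col]).sum()
    return positive_mass / total_mass


# Hypothetical toy data: group "a" is labelled positive far more often than "b".
toy = pd.DataFrame({
    "group": ["a"] * 4 + ["b"] * 4,
    "label": [1, 1, 1, 0, 1, 0, 0, 0],
})

weights = reweigh(toy, "group", "label")
rates = weighted_positive_rate(toy, weights, "group", "label")
print(rates)
```

The weights up-weight the under-represented (group, label) combinations and down-weight the over-represented ones; they could then be passed to any learner that accepts per-sample weights.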

Reading (Optional)

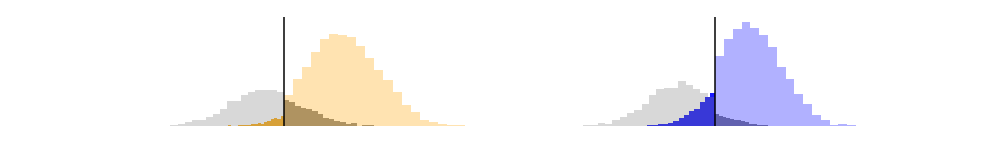

This paper approaches pre-processing a dataset by changing the distribution of attributes:

This paper uses the in-processing approach of adding a penalty to the loss function so that the learned model parameters are fairer:
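To make the in-processing idea concrete, here is a minimal sketch of a logistic regression whose loss adds a penalty on the demographic parity gap (the difference in mean predicted score between two groups). The squared-gap penalty, the strength `lam`, the plain gradient-descent trainer, and the synthetic data are all assumptions chosen for illustration; the paper's own penalty term may look different.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def parity_gap(p, group):
    """Difference in mean predicted score between group 1 and group 0."""
    return p[group == 1].mean() - p[group == 0].mean()


def penalized_loss(w, X, y, group, lam):
    """Binary cross-entropy plus lam * (demographic parity gap)^2."""
    p = sigmoid(X @ w)
    eps = 1e-12  # guards against log(0)
    bce = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
    return bce + lam * parity_gap(p, group) ** 2


def gradient(w, X, y, group, lam):
    """Analytic gradient of the cross-entropy term plus the penalty term."""
    p = sigmoid(X @ w)
    grad_bce = X.T @ (p - y) / len(y)
    s = p * (1 - p)  # derivative of the sigmoid with respect to its input
    d_gap = ((X[group == 1] * s[group == 1][:, None]).mean(axis=0)
             - (X[group == 0] * s[group == 0][:, None]).mean(axis=0))
    return grad_bce + 2 * lam * parity_gap(p, group) * d_gap


def fit(X, y, group, lam, lr=0.1, steps=3000):
    """Plain gradient descent from w = 0 on the penalized loss."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        w -= lr * gradient(w, X, y, group, lam)
    return w


# Hypothetical synthetic data: the single feature is correlated with the group.
rng = np.random.default_rng(0)
n = 400
group = rng.integers(0, 2, n)
x = rng.normal(loc=1.5 * group, scale=1.0)
y = (x + rng.normal(scale=1.0, size=n) > 0.75).astype(float)
X = np.column_stack([np.ones(n), x])  # intercept + feature; group is not a feature

w_plain = fit(X, y, group, lam=0.0)
w_fair = fit(X, y, group, lam=5.0)
gap_plain = parity_gap(sigmoid(X @ w_plain), group)
gap_fair = parity_gap(sigmoid(X @ w_fair), group)
print("gap without penalty:", gap_plain)
print("gap with penalty:   ", gap_fair)
```

Raising `lam` trades predictive accuracy for a smaller gap; setting it to zero recovers ordinary logistic regression.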

Reading Responses

- Implement each of the preprocessing techniques covered in lecture using Pandas. Turn in code for the ‘label flipping’ algorithm (a function that takes in a dataset and returns a balanced dataset).

- In the Financial Times article, discuss the fairness issues at stake through the lens of Fair Equality of Opportunity and Luck Egalitarianism. Be explicit about the utility being considered, the model (a regressor, not a classifier), and the decision-making involved. Where in the pipeline do you think fairness should be judged?